An Autonomous Vision-Based Target Tracking System for Rotorcraft Unmanned Aerial Vehicles

Paper

- Hui Cheng, Lishan Lin, Zhuoqi Zheng, Yuwei Guan, and Zhongchang Liu, "An Autonomous Vision-Based Target Tracking System for Rotorcraft Unmanned Aerial Vehicles," IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2017.

Abstract

In this paper, an autonomous vision-based tracking system is presented that enables a rotorcraft unmanned aerial vehicle (UAV) with an onboard gimbal camera to track a maneuvering target. To handle target occlusion or loss during real-time tracking, a robust and computationally efficient visual tracking scheme is proposed that combines the Kernelized Correlation Filter (KCF) tracker with a redetection algorithm. The states of the target are estimated from the visual information. Moreover, feedback control laws for the gimbal and the UAV that use the estimated states are proposed so that the UAV can track the moving target autonomously. The algorithms are implemented on an onboard TK1 computer, and extensive outdoor flight experiments have been performed. Experimental results show that the proposed computationally efficient visual tracking scheme can stably track a maneuvering target and is robust to target occlusion and loss.
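The KCF tracker learns a kernel ridge regression over all cyclic shifts of a target patch, so both training and detection reduce to element-wise operations in the Fourier domain. The sketch below is a minimal single-channel version with a Gaussian kernel, written with NumPy. It omits the HOG features, cosine window, and model update of the full KCF tracker, and all constants are assumed values. The check on the response peak (`LOST_THRESHOLD`) is a hypothetical stand-in for a redetection trigger, not the paper's criterion.

```python
import numpy as np

SIGMA = 0.5           # Gaussian kernel bandwidth (assumed value)
LAMBDA = 1e-4         # ridge regularization (assumed value)
LABEL_SIGMA = 2.0     # width of the desired response, in pixels (assumed value)
LOST_THRESHOLD = 0.3  # hypothetical peak level below which the target counts as lost


def gaussian_correlation(xf, yf, sigma=SIGMA):
    """Gaussian kernel between patch x and every cyclic shift of patch y (inputs are 2-D FFTs)."""
    n = xf.size
    xx = np.real(np.vdot(xf, xf)) / n              # ||x||^2 via Parseval's theorem
    yy = np.real(np.vdot(yf, yf)) / n              # ||y||^2
    xy = np.real(np.fft.ifft2(xf * np.conj(yf)))   # cross-correlation for every shift
    d = np.maximum(xx + yy - 2.0 * xy, 0.0) / n    # mean squared distance per shift
    return np.exp(-d / sigma**2)


def gaussian_labels(h, w, s=LABEL_SIGMA):
    """Desired response: a Gaussian peaked at zero shift, wrapped circularly."""
    oy = np.fft.fftfreq(h) * h  # integer offsets 0, 1, ..., -1
    ox = np.fft.fftfreq(w) * w
    gy, gx = np.meshgrid(oy, ox, indexing="ij")
    return np.exp(-0.5 * (gy**2 + gx**2) / s**2)


def train(patch):
    """Fit the dual coefficients alpha in the Fourier domain."""
    xf = np.fft.fft2(patch)
    kf = np.fft.fft2(gaussian_correlation(xf, xf))
    yf = np.fft.fft2(gaussian_labels(*patch.shape))
    return {"xf": xf, "alphaf": yf / (kf + LAMBDA)}


def detect(model, patch):
    """Return (dy, dx, peak): estimated target shift and response peak value."""
    zf = np.fft.fft2(patch)
    kzf = np.fft.fft2(gaussian_correlation(zf, model["xf"]))
    response = np.real(np.fft.ifft2(model["alphaf"] * kzf))
    py, px = np.unravel_index(np.argmax(response), response.shape)
    h, w = response.shape
    # Peaks beyond half the patch size correspond to negative (wrapped) shifts.
    dy = int(py) - h if py > h // 2 else int(py)
    dx = int(px) - w if px > w // 2 else int(px)
    return dy, dx, float(response[py, px])
```

In a tracking loop, `detect` would run on a patch cropped around the previous target position, the returned shift would update that position, and the model would be retrained on the new patch; a peak below `LOST_THRESHOLD` would hand control to the redetection step.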

Framework

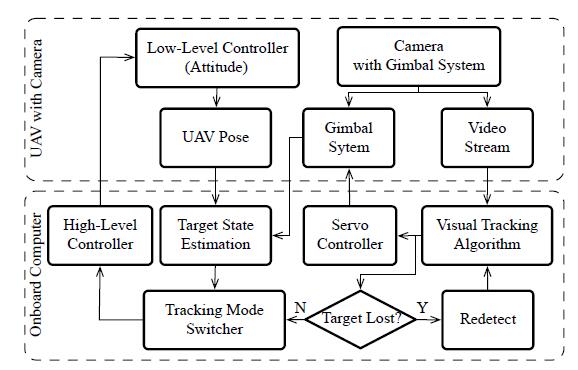

A DJI Matrice 100 is used as the UAV platform; it is equipped with an onboard TK1 computer and a monocular RGB gimbal camera. An overview of the system configuration is shown in the architecture figure. The gimbal camera mounted on the UAV platform provides the video stream and the gimbal angles to the onboard computer. The visual tracking algorithm obtains the position of the target on the image plane, which is fed back to the gimbal controller. In addition, the states of the target are estimated by fusing the inertial measurement unit (IMU) data of the UAV platform and the gimbal. A switching tracking strategy is executed based on the estimated states. The high-level controller computes the desired velocities of the UAV, and the low-level controller controls the attitude accordingly. The video stream and the control signal run at 30 Hz and 10 Hz, respectively.

Architecture of the vision-based tracking system.
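The architecture closes two outer loops: the gimbal loop keeps the tracked target near the image center, and the high-level loop turns the estimated target state into desired UAV velocities. The paper's exact control laws are not reproduced here. The sketch below assumes proportional laws with hypothetical gains and camera intrinsics, and uses a simple distance-band switch as a stand-in for the switching tracking strategy. Sign conventions are also assumptions: positive yaw rate pans right, positive pitch rate tilts down, and velocities are expressed in a local north-east frame.

```python
import math
from dataclasses import dataclass


@dataclass
class Intrinsics:
    """Pinhole camera parameters in pixels (hypothetical values in the example)."""
    fx: float
    fy: float
    cx: float
    cy: float


def clamp(value, limit):
    """Saturate value to the interval [-limit, limit]."""
    return max(-limit, min(limit, value))


def gimbal_rate_command(u, v, cam, k_yaw=1.5, k_pitch=1.5, max_rate=math.radians(60.0)):
    """Proportional gimbal law: angular offset of the target from the optical axis -> rate.

    (u, v) is the tracker's target position in pixels. Image v grows downward,
    so a target below the image center yields a positive (tilt-down) pitch rate.
    """
    yaw_err = math.atan2(u - cam.cx, cam.fx)
    pitch_err = math.atan2(v - cam.cy, cam.fy)
    return clamp(k_yaw * yaw_err, max_rate), clamp(k_pitch * pitch_err, max_rate)


def velocity_command(rel_north, rel_east, standoff=5.0, band=1.0, k_p=0.5, v_max=3.0):
    """High-level law with a hypothetical switch: hover inside the standoff band,
    otherwise drive the horizontal distance error to zero along the line of sight.

    (rel_north, rel_east) is the estimated target position relative to the UAV, in meters.
    """
    dist = math.hypot(rel_north, rel_east)
    err = dist - standoff
    if dist < 1e-6 or abs(err) <= band:
        return 0.0, 0.0  # close enough to the standoff distance: hold position
    speed = clamp(k_p * err, v_max)  # negative speed backs away from a too-close target
    return speed * rel_north / dist, speed * rel_east / dist
```

Following the rates given in the framework description, `gimbal_rate_command` would naturally run at the 30 Hz video rate and `velocity_command` at the 10 Hz control rate, with the low-level attitude controller tracking the commanded velocities.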

Experiments

The performance of the visual tracking scheme is evaluated on several videos collected using the UAV. Three cases are considered, i.e., no occlusion, partial occlusion and full occlusion.

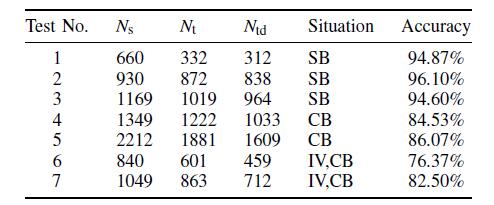

TABLE: Visual tracking results for a target under occlusion in different scenarios. Ns is the total number of frames in each test, Nt is the number of frames containing the target, and Ntd is the number of frames in which the target is correctly tracked and redetected. SB: simple background; CB: complex background; IV: illumination variation.
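The occlusion cases in the table differ mainly in how long the tracker's confidence stays low. One common way to organize a tracker-plus-redetection scheme is a two-state machine: keep tracking while the confidence is high, declare the target lost after several consecutive low-confidence frames, and then run a redetector until it returns a confident match. The sketch below illustrates only that switching logic; the class name, thresholds, frame count, and scores are hypothetical, and the paper's actual redetection criteria may differ.

```python
from enum import Enum


class Mode(Enum):
    TRACKING = "tracking"
    REDETECTING = "redetecting"


class OcclusionHandler:
    """Switch between tracking and redetection based on per-frame confidence scores.

    All thresholds are hypothetical placeholders, not values from the paper.
    """

    def __init__(self, track_threshold=0.3, redetect_threshold=0.5, patience=3):
        self.track_threshold = track_threshold
        self.redetect_threshold = redetect_threshold
        self.patience = patience  # consecutive low-confidence frames before declaring loss
        self.mode = Mode.TRACKING
        self.low_count = 0

    def step(self, tracker_score, redetector_score=None):
        """Update the mode from this frame's scores and return it."""
        if self.mode is Mode.TRACKING:
            # A short dip (e.g. brief partial occlusion) is tolerated; a sustained one is not.
            self.low_count = self.low_count + 1 if tracker_score < self.track_threshold else 0
            if self.low_count >= self.patience:
                self.mode = Mode.REDETECTING
        elif redetector_score is not None and redetector_score >= self.redetect_threshold:
            # Confident redetection: re-initialize the tracker and resume tracking.
            self.mode = Mode.TRACKING
            self.low_count = 0
        return self.mode
```

The `patience` counter is what separates a brief confidence drop, which the tracker rides through, from a sustained loss such as full occlusion, which hands control to the redetector.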

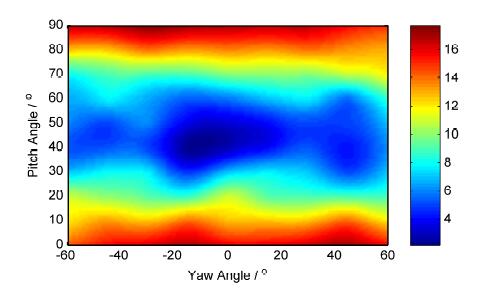

The pseudocolor plot of relative distance estimation errors indicates that the accuracy of the target state estimation decreases as the gimbal pitch angle increases.

Pseudocolor plot of relative distance estimation errors (%). The test covers gimbal yaw angles from -60 deg to 60 deg and gimbal pitch angles from 20 deg to 70 deg. The color legend on the right side of the figure gives the error values.
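Because the target states are estimated from the UAV and gimbal measurements, the relative distance in this test depends directly on the gimbal angles. A minimal sketch of one such estimate follows, assuming flat ground, a target held at the image center by the gimbal, gimbal angles already expressed in the local level frame (pitch measured downward from the horizon, yaw measured from north), and a known UAV height above the ground. The function name is hypothetical and this geometry is not necessarily the paper's estimator.

```python
import math


def target_relative_position(height, pitch_down, yaw):
    """Flat-ground estimate of the target position relative to the UAV.

    height:     UAV height above the flat ground plane, in meters
    pitch_down: gimbal pitch below the horizon, in radians (0 < pitch_down <= pi/2)
    yaw:        gimbal yaw from north, in radians
    Returns (north, east, horizontal_distance) in meters.
    """
    if not 0.0 < pitch_down <= math.pi / 2:
        raise ValueError("the camera must look below the horizon")
    horizontal = height / math.tan(pitch_down)  # ground range along the line of sight
    return horizontal * math.cos(yaw), horizontal * math.sin(yaw), horizontal
```

The pitch angle enters through `tan(pitch_down)`, so an error in the measured pitch propagates into the distance estimate differently at different gimbal angles, which is why the error is mapped over a grid of yaw and pitch values.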

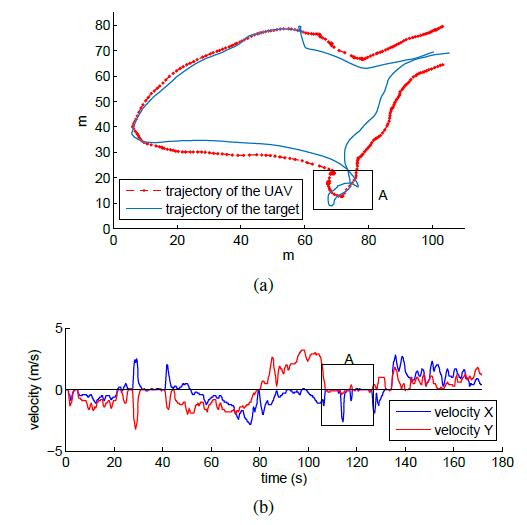

In the outdoor flight experiments, the UAV tracks a maneuvering person in an unstructured environment; the trajectories of the person and the UAV are shown in the figure.

(a) Trajectories of the UAV (red) and the target (blue); positions are derived from GNSS. (b) The UAV's x- and y-direction velocities in the body coordinate frame.
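Plotting GNSS trajectories in meters and reporting velocities in the body frame both involve a change of coordinates. The sketch below assumes a local equirectangular approximation (adequate over the few hundred meters of a flight test, and not the paper's stated method), a north-east local frame, and a body frame with x forward and y to the right, rotated from north by the UAV heading. Both function names are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def gnss_to_local(lat_deg, lon_deg, lat0_deg, lon0_deg):
    """Approximate north/east offsets (meters) of a GNSS fix from a reference fix."""
    d_lat = math.radians(lat_deg - lat0_deg)
    d_lon = math.radians(lon_deg - lon0_deg)
    north = EARTH_RADIUS_M * d_lat
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(lat0_deg))
    return north, east


def world_to_body_velocity(v_north, v_east, heading):
    """Rotate a horizontal north/east velocity into body x (forward) and y (right).

    heading is the UAV yaw from north in radians, positive clockwise seen from above.
    """
    c, s = math.cos(heading), math.sin(heading)
    vx = c * v_north + s * v_east
    vy = -s * v_north + c * v_east
    return vx, vy
```

With a heading of 90 degrees the UAV faces east, so an eastward velocity appears as forward motion (positive x) and a northward velocity appears as motion to the left (negative y).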
