Generalized Similarity Measure Learning

Paper

- Liang Lin, Guangrun Wang, Wangmeng Zuo, Xiangchu Feng, and Lei Zhang, “Cross-Domain Visual Matching via Generalized Similarity Measure and Feature Learning”, IEEE Transactions on Pattern Analysis and Machine Intelligence (T-PAMI), DOI: 10.1109/TPAMI.2016.2567386, 2016. Paper

Download

If you use these resources, please consider citing the paper above. BibTex_baiduyun BibTex_googledrive

### Code

The training and test code has been released here and includes a test demo: code_baiduyun or code_googledrive

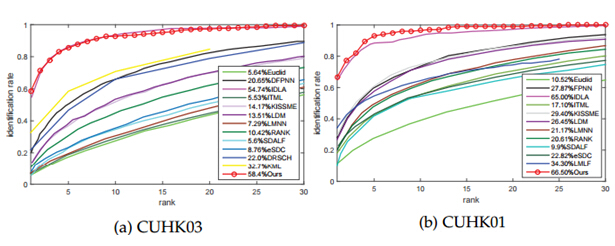

### Performance

The released generalized similarity code currently performs better on the CUHK03 person re-identification task than the results reported in the T-PAMI paper. The CMC curves from the paper can be downloaded here: CMC_curves_baiduyun or CMC_curves_googledrive
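For readers who want to compare their own results against CMC (Cumulative Match Characteristic) curves like these, the sketch below computes one from a probe-by-gallery distance matrix. It is a minimal illustration under simplifying assumptions: one query per row, every probe identity present in the gallery, and none of the CUHK03 split or repeated-trial protocol reproduced. `cmc_curve` is a hypothetical helper, not part of the released code.

```python
import numpy as np


def cmc_curve(dist, probe_ids, gallery_ids, max_rank=20):
    """CMC curve: entry k-1 is the fraction of probes whose correct gallery
    identity appears among the k gallery samples closest to the probe.

    dist: (num_probes, num_gallery) distances, smaller = more similar.
    For a similarity score S (larger = more similar), pass -S instead.
    Assumes every probe identity occurs in the gallery.
    """
    dist = np.asarray(dist, dtype=float)
    probe_ids = np.asarray(probe_ids)
    gallery_ids = np.asarray(gallery_ids)
    num_probes, num_gallery = dist.shape
    max_rank = min(max_rank, num_gallery)
    hits = np.zeros(max_rank)
    for i in range(num_probes):
        order = np.argsort(dist[i])                # gallery indices, closest first
        correct = np.flatnonzero(gallery_ids[order] == probe_ids[i])
        if correct.size and correct[0] < max_rank:
            hits[correct[0]:] += 1                 # a hit at rank r counts for every k >= r
    return hits / num_probes


dist = np.array([[0.1, 0.5, 0.9],
                 [0.4, 0.2, 0.3],
                 [0.2, 0.8, 0.6]])
print(cmc_curve(dist, probe_ids=[0, 1, 2], gallery_ids=[0, 1, 2]))
```

In this toy matrix the third probe's true match is only the second-closest gallery item, so rank-1 accuracy is 2/3 and rank-2 accuracy reaches 1.0. Because the curve is cumulative, it can only stay flat or rise as the rank grows.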

### Pre-trained models

We have released several trained models and corresponding prototxt files here. model_baiduyun or model_googledrive

See the README in the training and test code for more details: README_baiduyun or README_googledrive

Introduction

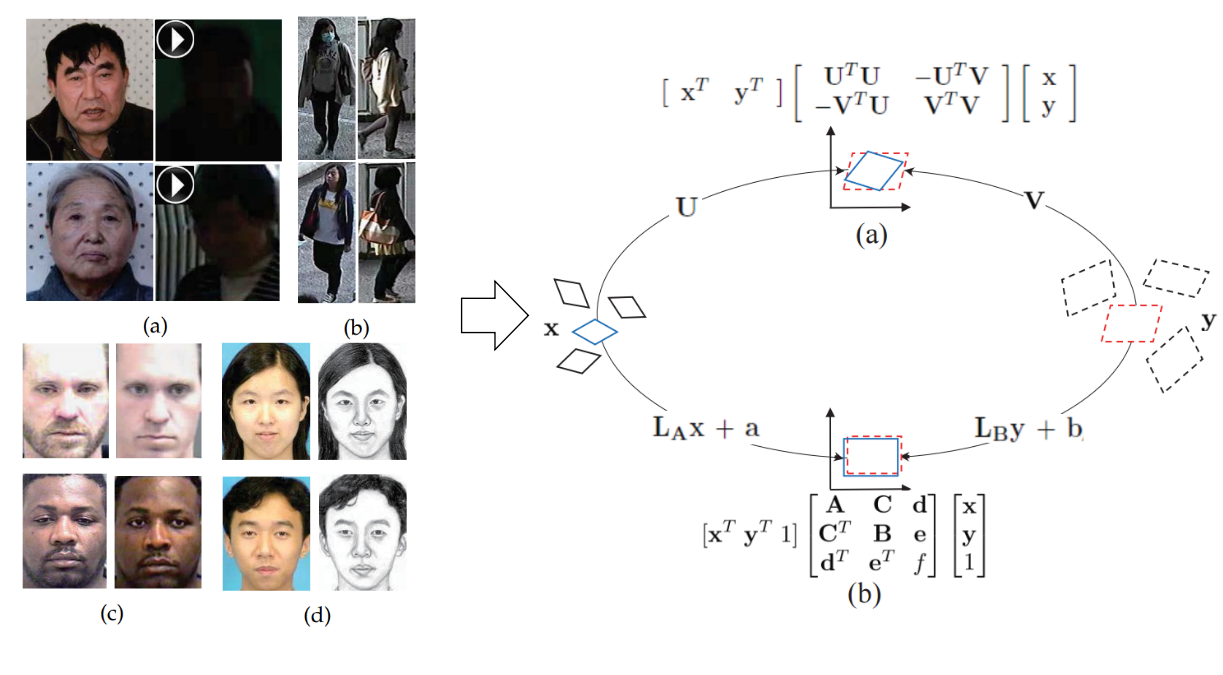

Fig. 1 Left: typical examples of matching cross-domain visual data; Right: illustration of the generalized similarity model.

As shown on the left of Fig. 1, we use four typical tasks to investigate the cross-domain visual matching problem: (a) faces from still images and videos, (b) front- and side-view persons, (c) older and younger faces, and (d) photo and sketch faces. We propose a generalized similarity model for this problem, illustrated on the right of Fig. 1. As shown in panel (a) on the right, conventional approaches project the data from the two domains with linear similarity transformations (i.e., U and V) and then use Euclidean distance as the distance metric. As shown in panel (b), we improve on these models by i) extending the linear similarity transformation to an affine transformation and ii) fusing Mahalanobis distance and cosine similarity. The conventional model in (a) is therefore a simplified special case of ours. Please refer to the paper for the full derivation.
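To make the idea concrete, here is a small NumPy sketch built on our own assumptions: affine projections u = Ux + a and v = Vy + b, an unnormalized inner product standing in for the cosine term, and a squared Mahalanobis distance with a symmetric positive semi-definite matrix M, combined with hypothetical weights `alpha` and `beta`. The helper names `fused_similarity` and `quadratic_form_matrix` are illustrative and not taken from the released code, and the paper's exact parameterization may differ. The second function expands the fused score into a single quadratic form in z = [x; y; 1], which is one way to see why the purely linear, Euclidean model is a special case.

```python
import numpy as np


def fused_similarity(x, y, U, a, V, b, M, alpha, beta):
    """Illustrative fused score between a domain-X sample x and a domain-Y sample y:
    alpha * <u, v> - beta * (u - v)^T M (u - v), with u = U x + a, v = V y + b.
    M must be symmetric PSD; alpha, beta and the inner-product term are assumptions.
    """
    u = U @ x + a          # affine projection of the domain-X sample
    v = V @ y + b          # affine projection of the domain-Y sample
    diff = u - v
    return alpha * (u @ v) - beta * (diff @ M @ diff)


def quadratic_form_matrix(U, a, V, b, M, alpha, beta):
    """Matrix K with fused_similarity(x, y, ...) == z^T K z for z = [x; y; 1]."""
    c = a - b
    A = -beta * U.T @ M @ U                            # x-x block
    B = -beta * V.T @ M @ V                            # y-y block
    C = 0.5 * alpha * U.T @ V + beta * U.T @ M @ V     # x-y cross block (halved)
    d = 0.5 * alpha * U.T @ b - beta * U.T @ M @ c     # linear term in x (halved)
    e = 0.5 * alpha * V.T @ a + beta * V.T @ M @ c     # linear term in y (halved)
    f = alpha * (a @ b) - beta * (c @ M @ c)           # constant term
    return np.block([
        [A, C, d[:, None]],
        [C.T, B, e[:, None]],
        [d[None, :], e[None, :], np.array([[f]])],
    ])


rng = np.random.default_rng(0)
dx, dy, k = 5, 4, 3                      # input dimensions may differ across domains
x, y = rng.normal(size=dx), rng.normal(size=dy)
U, V = rng.normal(size=(k, dx)), rng.normal(size=(k, dy))
a, b = rng.normal(size=k), rng.normal(size=k)
L = rng.normal(size=(k, k))
M = L.T @ L                              # symmetric PSD metric
z = np.concatenate([x, y, [1.0]])
K = quadratic_form_matrix(U, a, V, b, M, alpha=0.5, beta=1.0)
print(np.isclose(fused_similarity(x, y, U, a, V, b, M, 0.5, 1.0), z @ K @ z))
```

Setting a = b = 0, M = I, alpha = 0 and beta = 1 recovers the negated squared Euclidean distance between the linear projections Ux and Vy; in that case the last row of K, which holds the linear and constant terms, is all zeros.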

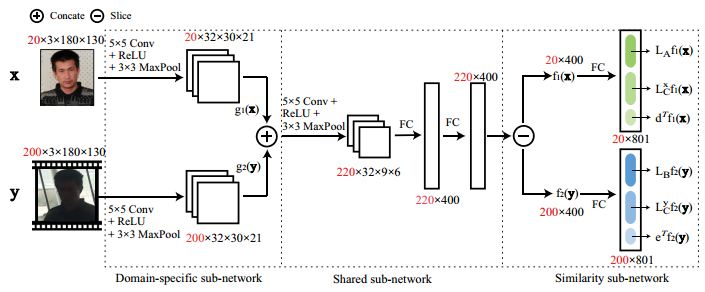

Deep Architecture

Fig. 2 Deep architecture of our similarity model. It consists of three parts: a domain-specific sub-network, a shared sub-network, and a similarity sub-network. The first two parts, built from convolutional layers, max-pooling operations, and fully-connected layers, extract feature representations from samples of the different domains. The similarity sub-network contains two structured fully-connected layers that incorporate the similarity components. See the paper for details.
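The following NumPy toy traces the data flow of Fig. 2. It is a sketch under stated assumptions: each convolution and max-pooling stack is replaced by a single fully-connected layer with ReLU, the layer sizes are arbitrary, and the similarity head (per-domain affine projections fused by an inner product minus a squared distance) is a hypothetical stand-in for the paper's structured fully-connected layers. The class `TwoDomainSimilarityNet` is illustrative and not part of the released code; the point it shows is which weights are per-domain and which are shared.

```python
import numpy as np


def dense_relu(W, bias, h):
    """Fully-connected layer + ReLU; stands in for a conv/max-pool stack here."""
    return np.maximum(W @ h + bias, 0.0)


class TwoDomainSimilarityNet:
    """Toy three-part layout: domain-specific -> shared -> similarity head."""

    def __init__(self, dim_x, dim_y, hidden=32, feat=16, emb=8, seed=0):
        rng = np.random.default_rng(seed)

        def init(rows, cols):
            # Gaussian init scaled by fan-in; an arbitrary choice for this sketch.
            return rng.normal(scale=1.0 / np.sqrt(cols), size=(rows, cols))

        # Domain-specific sub-networks: separate weights per domain, so the
        # two domains may even have different input sizes.
        self.Wx, self.bx = init(hidden, dim_x), np.zeros(hidden)
        self.Wy, self.by = init(hidden, dim_y), np.zeros(hidden)
        # Shared sub-network: a single set of weights reused for both domains.
        self.Ws, self.bs = init(feat, hidden), np.zeros(feat)
        # Similarity sub-network (hypothetical): per-domain affine projections.
        self.U, self.a = init(emb, feat), np.zeros(emb)
        self.V, self.b = init(emb, feat), np.zeros(emb)

    def features(self, x, y):
        """Shared-space features for a domain-X sample x and a domain-Y sample y."""
        hx = dense_relu(self.Ws, self.bs, dense_relu(self.Wx, self.bx, x))
        hy = dense_relu(self.Ws, self.bs, dense_relu(self.Wy, self.by, y))
        return hx, hy

    def similarity(self, x, y):
        """Scalar score, intended to be larger for matching pairs once trained."""
        hx, hy = self.features(x, y)
        u = self.U @ hx + self.a
        v = self.V @ hy + self.b
        # Inner-product term minus squared Euclidean distance (illustrative fusion).
        return float(u @ v - np.sum((u - v) ** 2))


rng = np.random.default_rng(1)
net = TwoDomainSimilarityNet(dim_x=12, dim_y=7)   # e.g. photo vs. sketch inputs
print(net.similarity(rng.normal(size=12), rng.normal(size=7)))
```

Keeping the first layers domain-specific lets each branch absorb modality-specific appearance (and input size), while the shared layers force both domains into one feature space before the similarity head compares them.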

Experiments

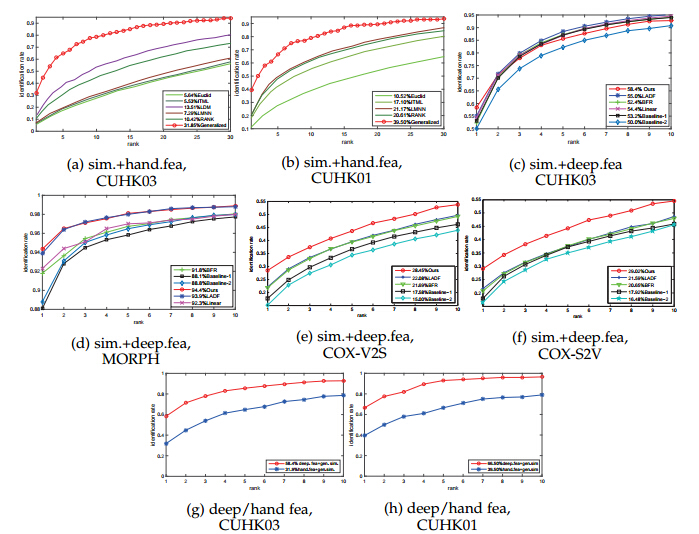

Fig. 3 Person re-identification task.

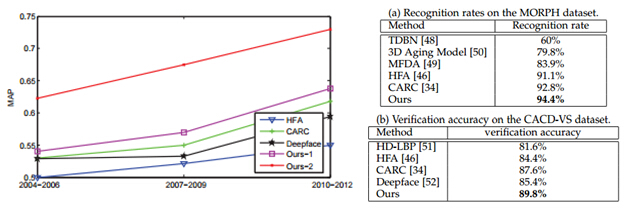

Fig. 4 Age-invariant face recognition task. The CMC curves show results on the CACD dataset.

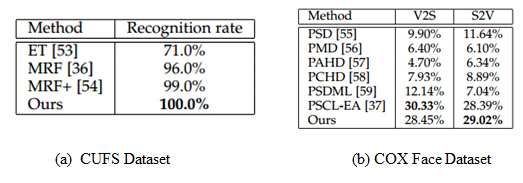

Fig. 5 (a) Photo-to-sketch face recognition task and (b) still-video face recognition task.

Fig. 6 Ablation studies.
